This is a log of stuff I did w/ one of my random pet projects, it’s not terribly interesting and is mostly just so I can get into the habit of writing more. You have been warned.

Working on mealplanner again, this time I finished getting most of the recipes imported, along with some quality of life improvements to my developer process.

Developer Iteration Cycle Improvements

Last time I coded the import but found several PDFs that couldn’t be imported, and was going to add some tests using them to make sure the import process works with them.

There were a couple of issues w/ the development/test setup, however, that made running and fixing tests very annoying.

First, the auto reload that’s supposed to happen when you launch a Tauri app in development mode and change a source file didn’t. This seems to be a problem w/ the npm create-app for Tauri; it never worked.

Turns out the browser Tauri launched was caching the file, so if the bundled file’s contents changed, nothing would update in the browser on reload. Easily fixed by adding a cache buster to the bundled file:

    // cache busting so the page actually reloads new JS when running the dev server
    config.output.filename('[hash].js');
    config.output.chunkFilename('[chunkhash].js');
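
Here's a minimal sketch of how that could be limited to the dev server. It assumes `config` is a chainable bundler config (the call style looks like webpack-chain, but that's a guess). `applyCacheBusting`, `ChainableConfig`, `ChainableOutput` and the `mode` parameter are made-up names for illustration, not part of any library:

```typescript
// Hypothetical minimal shape of the chainable config object. The real one
// comes from the bundler tooling and has many more methods.
interface ChainableOutput {
  filename(name: string): ChainableOutput;
  chunkFilename(name: string): ChainableOutput;
}

interface ChainableConfig {
  output: ChainableOutput;
}

// Use hashed filenames only in development, so the embedded browser can't
// serve a stale bundle from its cache. Assumption: production builds keep
// whatever naming scheme they already have.
function applyCacheBusting(config: ChainableConfig, mode: string): void {
  if (mode !== 'development') {
    return;
  }
  config.output.filename('[hash].js');
  config.output.chunkFilename('[chunkhash].js');
}
```

Whether the production build needs the same treatment depends on how the app loads its assets, so treat the `mode` check as optional.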

The second problem was w/ chai.js. I use deep equal asserts quite often (all the time), because, well, they’re quick to write and cover everything I need in testing an expected result. But chai.js doesn’t provide useful output when a test fails.

Essentially, the entire actual object and expected object are turned into JSON-ish text, truncated and displayed on the same line in the error output. So if there are a couple differences in a large data structure, there’s no way to tell where and what they are, without sticking some console.log()s just to grab the values. Then you have to actually compare them by looking at them. It’s slow, boring and extremely frustrating.

I checked and there’s actually an issue about this in the chai.js repo, but… it’s an older test library (which I’m using because I have to run the tests in a Tauri browser, not through node, so I can’t use anything newer) and that issue hasn’t been updated in years. In fact, it doesn’t look like anything has.

Fortunately, chai.js is very easy to extend. So I added my own custom deep equal that relies on the json-diff package:

    import * as chai from 'chai';
    import { diffString } from 'json-diff';
    import Convert from 'ansi-to-html';

    const convert = new Convert();

    // add a deep equal extension to chai that uses json-diff for a prettier display
    // eslint-disable-next-line @typescript-eslint/no-explicit-any
    chai.Assertion.addMethod('deepJsonEqual', function deepJsonEqual(expected: any) {
      const actual = this._obj; // eslint-disable-line no-underscore-dangle

      let diffResult = convert.toHtml(diffString(expected, actual));
      // mocha just displays the spans escaped, so we can't have colors here :(
      diffResult = diffResult.replace(/<\/?span[^>]*?>/g, '');

      this.assert(
        diffResult.length === 0,
        // #{this} is the value under test, #{exp} is the expected value
        `expected #{this} to be deep equal to #{exp}, but found differences:\n${diffResult}`,
        'expected #{this} to not be deep equal to #{exp}, but found no differences',
        expected,
        actual,
      );
    });

    declare global {
      namespace Chai { // eslint-disable-line @typescript-eslint/no-namespace
        interface Assertion {
          // eslint-disable-next-line @typescript-eslint/no-explicit-any
          deepJsonEqual(expected: any): void;
        }
      }
    }

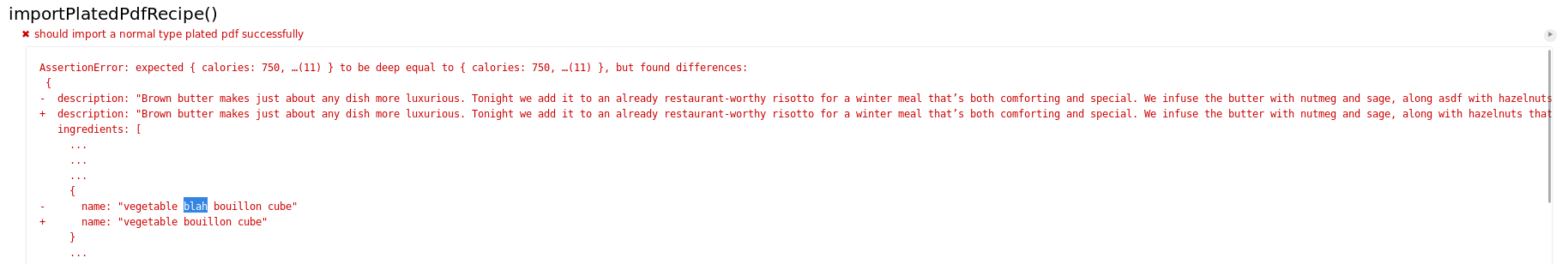

This gives me a MUCH better error message when a test fails:

These two changes made the cycle of run tests, interpret output, make change, repeat, far less time consuming.
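
To show why a structural diff beats two truncated JSON blobs, here's a dependency-free sketch of the idea. It is not how json-diff works internally, just a simplified stand-in: `listDifferences` and the `Json` type are hypothetical names, and it only handles plain JSON-like values.

```typescript
// JSON-like values only: primitives, arrays and plain objects.
type Json = null | boolean | number | string | Json[] | { [key: string]: Json };

function isPlainObject(value: Json): value is { [key: string]: Json } {
  return typeof value === 'object' && value !== null && !Array.isArray(value);
}

// Hypothetical helper (not part of chai or json-diff): returns one line per
// path where `actual` differs from `expected`.
function listDifferences(
  expected: Json | undefined,
  actual: Json | undefined,
  path = '$',
): string[] {
  if (expected === undefined) return [`${path}: unexpected ${JSON.stringify(actual)}`];
  if (actual === undefined) return [`${path}: missing ${JSON.stringify(expected)}`];

  if (Array.isArray(expected) && Array.isArray(actual)) {
    // Compare index by index; a shorter side shows up as missing/unexpected.
    const lines: string[] = [];
    const length = Math.max(expected.length, actual.length);
    for (let i = 0; i < length; i += 1) {
      lines.push(...listDifferences(expected[i], actual[i], `${path}[${i}]`));
    }
    return lines;
  }

  if (isPlainObject(expected) && isPlainObject(actual)) {
    // Walk the union of keys from both sides.
    const keys = Object.keys(expected);
    for (const key of Object.keys(actual)) {
      if (keys.indexOf(key) === -1) keys.push(key);
    }
    const lines: string[] = [];
    for (const key of keys) {
      lines.push(...listDifferences(expected[key], actual[key], `${path}.${key}`));
    }
    return lines;
  }

  // Primitives, or mismatched container types.
  return expected === actual
    ? []
    : [`${path}: expected ${JSON.stringify(expected)}, got ${JSON.stringify(actual)}`];
}

console.log(listDifferences(
  { title: 'Soup', servings: 2, steps: ['chop', 'boil'] },
  { title: 'Soup', servings: 4, steps: ['chop'] },
).join('\n'));
// $.servings: expected 2, got 4
// $.steps[1]: missing "boil"
```

Each line names the exact path that differs, which is the part the stock deep equal output leaves you to find by eye.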

Getting the import working

Once the test cycle improvements were done, I added tests for the files that couldn’t be imported and got them to work. Except for one of the files: turns out it was just a PDF with two giant images for each page. Definitely not going to waste time importing that now.

Anyway, finished this session with a complete, imported database (minus a handful of recipes I skipped). The result is a sqlite database that’s about 1.5 gigabytes. Which is… a problem.

I’m not going to distribute the app w/ the plated recipe database, but it will need to be downloaded and stored locally, and 1.5 gigs is just way too fucking big. To download or to just have locally. I mean, it’s just a bunch of recipes.

So making the database smaller is definitely something I’ll need to do. Eventually.

Right now, I want to get to a functioning app as quickly as possible, because, well, I need it. I really, really need to stop eating takeout/stir-fries and I definitely don’t have the time to look for recipes, evaluate them for nutrition, create shopping lists, shop, cook and do everything else I need to do in a day. And I don’t think anyone should need to pay for something this basic. But I’ve talked about all this before, haven’t I?

Next on my to-do list, then, will be getting the imported recipes to display in the small UI that currently exists.

Also, I just realized the repo was private on GitLab. It’s public now :)